The Turing Test and Artificial Intelligence

The Turing Test, proposed by Alan Turing in 1950, is a classic method for evaluating whether an artificial intelligence can communicate in a way that is indistinguishable from a human. In this test, a human judge holds text-based conversations with both a human and a computer without knowing which is which, and the machine attempts to convince the judge that it is the human. If the judge cannot reliably tell the difference, the machine is considered to have passed the test.

In 2014, a chatbot named Eugene Goostman reportedly convinced about a third of the judges at a Royal Society event that it was human, although critics disputed whether that result counted as a pass. In recent years, models like ChatGPT have become far more convincing in human-like dialogue. Newer versions can generate responses that feel natural and context-aware, making them strong candidates in Turing-style evaluations. However, no AI model has officially passed a strict, fully controlled Turing Test to date.

What Is Humanity’s Last Exam (HLE)?

Humanity’s Last Exam (HLE) is a modern benchmark designed to test the advanced reasoning and knowledge capabilities of artificial intelligence systems. The exam consists of approximately 2,500 expert-level questions spanning a wide range of disciplines, including mathematics, physics, biology, medicine, humanities, computer science, engineering, and chemistry. About 14 percent of the questions (roughly 350) include visual elements such as charts, diagrams, and images, requiring models to combine textual and visual reasoning.

Unlike traditional benchmarks, HLE focuses on complex, multi-layered problem solving rather than pattern recognition. The name reflects its ambition: it is designed to challenge even the most advanced AI models. If an AI system were to perform at a human-expert level on this exam, it would indicate a major leap toward human-level intelligence.

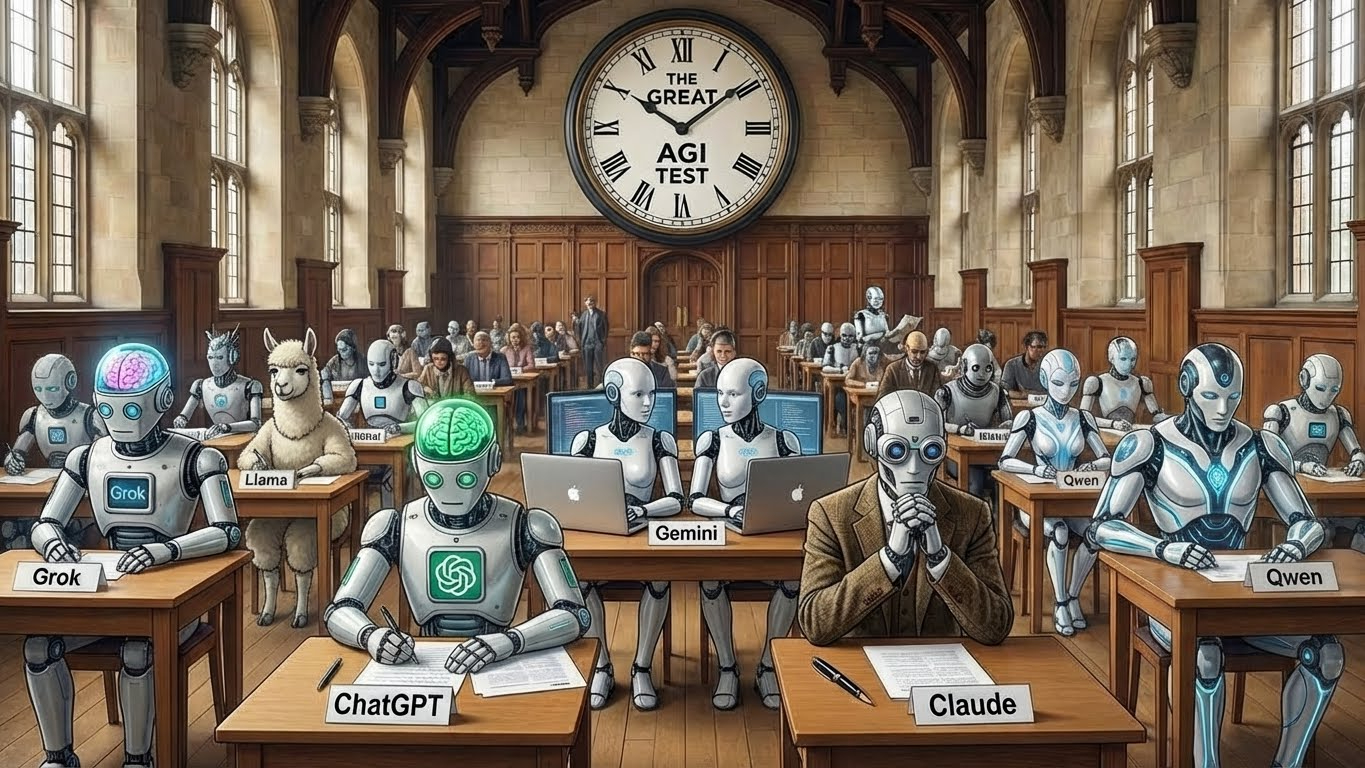

Performance of Leading AI Models

So far, no artificial intelligence system has matched human expert performance on Humanity’s Last Exam. Among currently evaluated models, Google’s Gemini 3 Pro has achieved one of the highest scores, with approximately 37.5 percent accuracy. OpenAI’s GPT-5 Pro follows with around 31.6 percent, while Anthropic’s Claude 4.5 scores close to 25.2 percent. Other well-known models such as Mistral, Llama, and various enterprise systems have shown lower results.

For comparison, human experts typically score close to 90 percent on the same exam. This gap highlights the difference between appearing knowledgeable and truly understanding complex concepts. While AI models can process vast amounts of information, they still struggle with deep reasoning, intuition, and creative problem solving.
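To make the size of that gap concrete, here is a minimal Python sketch that subtracts each quoted model score from the human-expert figure. The numbers are the approximate values quoted in this article, the 90 percent baseline is treated as exact purely for illustration, and the names `scores`, `HUMAN_EXPERT`, and `gap_to_experts` are hypothetical helpers rather than part of any official HLE tooling.

```python
# Approximate HLE accuracies quoted in this article, in percent.
scores = {
    "Gemini 3 Pro": 37.5,
    "GPT-5 Pro": 31.6,
    "Claude 4.5": 25.2,
}

# The article's "close to 90 percent" expert figure, assumed exact for this sketch.
HUMAN_EXPERT = 90.0


def gap_to_experts(model_score, expert_score=HUMAN_EXPERT):
    """Return the percentage-point gap between the expert baseline and a model."""
    return round(expert_score - model_score, 1)


gaps = {name: gap_to_experts(score) for name, score in scores.items()}

# Print models from the smallest gap to the largest.
for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name}: {scores[name]:.1f}% accuracy, {gap:.1f} points below experts")
```

Under these assumptions, even the best-scoring model listed sits more than 50 percentage points below the expert baseline.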

In contrast, Turing-style evaluations tell a slightly different story. Modern conversational models can be highly persuasive in short dialogues, often producing responses that feel indistinguishable from those of a human. In controlled conversational settings, some users may mistake AI-generated answers for human ones. However, this does not mean that AI has fully reached human intelligence; rather, it shows that conversational fluency alone is not a complete measure of understanding.

What High AI Scores Could Mean for Humanity

If future artificial intelligence systems begin to achieve human-level or higher scores on tests like Humanity’s Last Exam, it would signal a transformative moment in technological history. Such progress could dramatically impact scientific research, education, healthcare, and decision-making processes. AI systems might assist in complex medical diagnoses, accelerate scientific discoveries, or provide expert-level analysis across multiple fields.

At the same time, these advancements would raise important questions about the role of humans in a world where machines can reason at comparable levels. Work environments, education systems, and creative industries could undergo major shifts. High-performing AI would represent both an extraordinary opportunity and a serious responsibility, requiring careful consideration of how such intelligence is developed and applied.

Ultimately, the results of AI exams like the Turing Test and Humanity’s Last Exam remind us that artificial intelligence is rapidly evolving. While current systems have not yet surpassed human experts, their steady progress suggests that the line between human and machine intelligence will continue to blur, shaping the future of humanity in profound ways.